CNN (Convolutional Neural Network) Explanation Part 3

Part 3. CNN Trends and Future

Latest Research Trends in CNNs

Lightweight Models

Lightweight CNN models have become increasingly important due to the growing demand for efficient deep learning solutions on resource-constrained devices. Here's a comparison of some popular lightweight models:

| Model | Top-1 Accuracy (ImageNet) | Parameters | FLOPs (multiply-adds) |
|---|---|---|---|
| MobileNetV1 | 70.6% | 4.2M | 569M |
| MobileNetV2 | 72.0% | 3.4M | 300M |
| MobileNetV3-Large | 75.2% | 5.4M | 219M |
| EfficientNet-B0 | 77.1% | 5.3M | 390M |
| EfficientNet-B7 | 84.3% | 66M | 37B |

Key innovations in lightweight models include:

- Depthwise separable convolutions (MobileNet)

- Inverted residuals and linear bottlenecks (MobileNetV2)

- Squeeze-and-excitation modules (MobileNetV3)

- Compound scaling (EfficientNet)

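These techniques have significantly reduced model size and computational requirements while maintaining high accuracy. In the table above, for example, MobileNetV2 needs roughly half the multiply-adds of MobileNetV1 (300M vs. 569M) yet scores 1.4 points higher in top-1 accuracy.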
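Here's a back-of-the-envelope sketch in Python that makes the savings of depthwise separable convolutions concrete. It counts weights and multiply-adds for a standard convolution and for a depthwise convolution followed by a 1×1 pointwise convolution. It assumes stride 1, "same" padding, and no bias terms. The example layer (3×3 kernel, 128 to 256 channels, 56×56 feature map) is hypothetical.

```python
def standard_conv_cost(k, c_in, c_out, h, w):
    """Weights and multiply-adds of a standard k x k convolution (stride 1, 'same' padding, no bias)."""
    params = k * k * c_in * c_out
    mult_adds = params * h * w  # every output position applies all k*k*c_in*c_out weights
    return params, mult_adds


def depthwise_separable_cost(k, c_in, c_out, h, w):
    """Weights and multiply-adds of a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in  # one k x k filter per input channel, no channel mixing
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    params = depthwise + pointwise
    mult_adds = params * h * w
    return params, mult_adds


if __name__ == "__main__":
    # Hypothetical layer: 3x3 kernel, 128 -> 256 channels, 56x56 feature map
    std = standard_conv_cost(3, 128, 256, 56, 56)
    sep = depthwise_separable_cost(3, 128, 256, 56, 56)
    print("standard  (params, mult-adds):", std)
    print("separable (params, mult-adds):", sep)
    print(f"reduction: {std[1] / sep[1]:.1f}x")
```

The cost ratio works out to 1/c_out + 1/k². For 3×3 kernels, the separable version therefore needs roughly 8 to 9 times fewer operations, which is the saving the MobileNet paper reports.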

Neural Architecture Search (NAS)

NAS automates the search for CNN architectures that were previously designed by hand. MobileNetV3 and EfficientNet-B0, for example, were both found with architecture search. Here's a brief timeline of NAS development:

- 2016: NAS with reinforcement learning

- 2018: Progressive NAS (PNAS) and Efficient NAS (ENAS)

- 2019: Hardware-aware NAS (e.g., MnasNet, ProxylessNAS, FBNet)

- 2020: Once-for-All network

Recent NAS approaches focus on:

- Reducing search time and computational cost

- Joint optimization of accuracy and efficiency

- Hardware-aware search to optimize for specific deployment platforms
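One common way to optimize accuracy and efficiency jointly is to fold both into a single scalar reward. The sketch below uses a soft-constraint objective in the style of MnasNet, ACC(m) × (LAT(m)/T)^w. Here T is a target latency, and w < 0 penalizes models slower than the target. The value w = -0.07 mirrors the setting reported for MnasNet, but treat it as a tunable assumption. The candidate architectures and their accuracy and latency numbers are hypothetical. Scoring a fixed list stands in for the RL or evolutionary controller that a real NAS system would use.

```python
def hardware_aware_reward(accuracy, latency_ms, target_ms, w=-0.07):
    """Soft-constraint objective: accuracy * (latency / target) ** w.

    With w < 0, models slower than the target are penalised and faster
    models receive a small bonus, trading accuracy against latency.
    """
    return accuracy * (latency_ms / target_ms) ** w


def select_best(candidates, target_ms, w=-0.07):
    """Return the candidate with the highest reward.

    A real NAS system would propose candidates with a controller (RL,
    evolution, or gradient-based search); here we simply score a given list.
    """
    return max(
        candidates,
        key=lambda c: hardware_aware_reward(c["acc"], c["latency_ms"], target_ms, w),
    )


if __name__ == "__main__":
    # Hypothetical candidates measured on a hypothetical target device
    candidates = [
        {"name": "wide", "acc": 0.77, "latency_ms": 120.0},
        {"name": "balanced", "acc": 0.75, "latency_ms": 60.0},
        {"name": "tiny", "acc": 0.73, "latency_ms": 40.0},
    ]
    for c in candidates:
        print(c["name"], round(hardware_aware_reward(c["acc"], c["latency_ms"], 80.0), 4))
    print("selected:", select_best(candidates, target_ms=80.0)["name"])
```

Raising |w| pushes the search toward faster models. Setting w = 0 ignores latency entirely.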

Self-supervised Learning

Self-supervised learning has gained traction as a way to leverage large amounts of unlabeled data. Here are some popular self-supervised learning techniques for CNNs:

- Contrastive learning (e.g., SimCLR, MoCo)

- Masked image modeling (e.g., MAE, BEiT; originally developed for Vision Transformers)

- Jigsaw puzzle solving

- Rotation prediction

Features learned this way often transfer well to downstream tasks where labeled data is limited.
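Here's a plain-Python sketch of contrastive learning: the NT-Xent (normalized temperature-scaled cross-entropy) loss popularized by SimCLR. It assumes z1[i] and z2[i] are the outputs of a CNN encoder plus projection head for two augmented views of the same image. Computing those embeddings is not shown, and the 2-D embeddings in the example are hypothetical. A real implementation would batch this computation with a tensor library.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent(z1, z2, temperature=0.5):
    """NT-Xent loss for N positive pairs (z1[i], z2[i]).

    Each of the 2N embeddings uses its partner view as the positive and the
    remaining 2N - 2 embeddings in the batch as negatives.
    """
    z = z1 + z2  # concatenate the two views: 2N embeddings
    n = len(z1)
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the other view of the same image
        logits = {k: cosine_similarity(z[i], z[k]) / temperature for k in range(2 * n) if k != i}
        m = max(logits.values())  # log-sum-exp with max subtraction for stability
        log_denominator = m + math.log(sum(math.exp(s - m) for s in logits.values()))
        total += log_denominator - logits[positive]  # -log softmax of the positive pair
    return total / (2 * n)


if __name__ == "__main__":
    # Hypothetical 2-D embeddings for two images, two augmented views each
    aligned = nt_xent([[1.0, 0.0], [0.0, 1.0]], [[0.9, 0.1], [0.1, 0.9]])
    swapped = nt_xent([[1.0, 0.0], [0.0, 1.0]], [[0.1, 0.9], [0.9, 0.1]])
    print(aligned, swapped)  # matching views should give the lower loss
```

Minimizing this loss pulls the two views of each image together and pushes other images apart, which lets the encoder learn useful features without labels.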

Limitations and Solutions for CNNs

Data Efficiency Issues

CNNs typically need large labeled datasets to generalize well, and labels are often expensive to collect. To reduce this dependence, researchers have developed various techniques:

- Few-shot learning

- Data augmentation

- Transfer learning

Here's a comparison of data augmentation techniques:

| Technique | Description | Typical Effectiveness |
|---|---|---|
| Geometric transformations | Rotation, flipping, scaling | Moderate |
| Color jittering | Brightness, contrast, saturation adjustments | Moderate |
| Mixup | Linear interpolation of two images and their labels | High |
| CutMix | Pastes a patch of one image onto another; labels mixed in proportion to patch area | High |
| AutoAugment | Augmentation policies learned by search | Very High |
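Mixup and CutMix from the table are simple enough to sketch directly. The version below assumes images are plain Python lists (a flat list of pixel values for Mixup, a 2-D grid for CutMix) and labels are one-hot lists. Sampling the mixing ratio from Beta(α, α) follows the original papers. The α defaults are only common choices, not prescribed values.

```python
import math
import random


def mixup(x1, y1, x2, y2, alpha=0.2, rng=random):
    """Mixup: blend two flattened images and their one-hot labels.

    lambda ~ Beta(alpha, alpha); the same lambda weights pixels and labels.
    """
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam


def cutmix(img1, y1, img2, y2, alpha=1.0, rng=random):
    """CutMix: paste a random box from img2 into img1 and mix labels by area."""
    h, w = len(img1), len(img1[0])
    lam = rng.betavariate(alpha, alpha)
    # Box covering roughly a (1 - lam) fraction of the image, centred at a random point
    cut_h, cut_w = int(h * math.sqrt(1 - lam)), int(w * math.sqrt(1 - lam))
    cy, cx = rng.randrange(h), rng.randrange(w)
    top, bottom = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    left, right = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)
    mixed = [row[:] for row in img1]
    for r in range(top, bottom):
        mixed[r][left:right] = img2[r][left:right]
    # The box may be clipped at the border, so recompute lambda from its true area
    lam = 1 - (bottom - top) * (right - left) / (h * w)
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return mixed, y, lam


if __name__ == "__main__":
    rng = random.Random(0)  # seeded for reproducibility
    print(mixup([0.0, 1.0], [1.0, 0.0], [1.0, 0.0], [0.0, 1.0], rng=rng))
    zeros = [[0] * 8 for _ in range(8)]
    ones = [[1] * 8 for _ in range(8)]
    mixed, label, lam = cutmix(zeros, [1.0, 0.0], ones, [0.0, 1.0], rng=rng)
    print(label, lam)
```

Recomputing λ from the clipped box keeps the label weights equal to the fraction of pixels that actually came from each image.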

Vulnerability to Adversarial Attacks

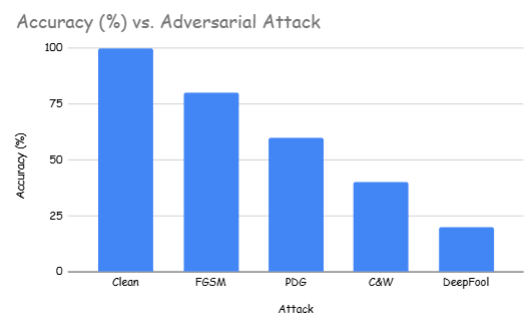

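CNNs are susceptible to adversarial attacks: small, carefully crafted perturbations to an input, often imperceptible to humans, can change a model's prediction. The chart above shows the impact of different adversarial attack methods on model accuracy.

Here's a breakdown of what the chart illustrates: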

- The vertical axis (y-axis) represents the accuracy of the model, ranging from 0% to 100%.

- The horizontal axis (x-axis) shows different scenarios:

  - "Clean": the model's performance on unmodified, clean images.

  - "FGSM", "PGD", "C&W", "DeepFool": different adversarial attack methods.

- Accuracy decreases steadily from clean images across the attack methods:

  - On clean images, the model achieves 100% accuracy.

  - With the FGSM attack, accuracy drops to about 80%.

  - The PGD attack further reduces accuracy to around 60%.

  - The C&W attack brings accuracy down to approximately 40%.

  - The DeepFool attack results in the lowest accuracy, at about 20%.

This chart illustrates how adversarial attacks can sharply degrade a CNN's accuracy, with some attacks fooling the model more effectively than others. The figures are illustrative. Actual accuracy drops depend on the model, the dataset, and the perturbation budget each attack is allowed. In every case, the vulnerability of CNNs to carefully crafted adversarial examples motivates robust defense mechanisms.
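FGSM, the simplest attack in the chart, moves each input value by ±ε in the direction that increases the loss. The sketch below uses a logistic-regression model as a stand-in for a CNN, so the input gradient can be written by hand. With a real CNN, the same gradient comes from backpropagation. The weights, input, and ε are hypothetical. PGD, also in the chart, essentially repeats this step several times with a smaller step size and projects the result back into the ε-ball after each step.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def predict(w, b, x):
    """P(class 1 | x) for a logistic-regression model standing in for a CNN."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: x_adv = clip(x + eps * sign(dL/dx), 0, 1).

    For binary cross-entropy loss L with p = sigmoid(w.x + b),
    the input gradient is dL/dx = (p - y) * w.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    # Step every input value by eps in the loss-increasing direction, then clip to valid pixels
    return [min(max(xi + eps * sign(gi), 0.0), 1.0) for xi, gi in zip(x, grad)]


if __name__ == "__main__":
    # Hypothetical weights and a correctly classified input with pixels in [0, 1]
    w, b = [2.0, -3.0, 1.0], 0.0
    x, y = [0.6, 0.2, 0.5], 1
    x_adv = fgsm(w, b, x, y, eps=0.3)
    print("clean p(y=1):", round(predict(w, b, x), 3))
    print("adversarial p(y=1):", round(predict(w, b, x_adv), 3))
```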

Defensive techniques include:

- Adversarial training

- Defensive distillation

- Input preprocessing

- Certified defenses

Efforts to Improve Interpretability

Interpretability techniques for CNNs can be categorized as follows:

- Visualization techniques

  - Grad-CAM

  - Saliency maps

- Concept-based explanations

  - TCAV (Testing with Concept Activation Vectors)

- Attention mechanisms

- Explainable AI frameworks
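Grad-CAM, one of the visualization techniques listed, reduces to a weighted sum of feature maps. Here's a minimal sketch of that final step. It assumes you have already extracted the activations A^k of the last convolutional layer and the gradients of the target class score with respect to them, typically via framework hooks. Upsampling the coarse heatmap to the input resolution is omitted, and the 2×2 maps in the example are hypothetical.

```python
def grad_cam(activations, gradients):
    """Combine conv feature maps into a Grad-CAM heatmap.

    activations[k][i][j]: value of feature map A^k at position (i, j)
    gradients[k][i][j]:   d(class score y^c) / d(A^k[i][j])
    """
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global-average-pooled gradient, i.e. how much channel k matters for class c
    alphas = [sum(map(sum, g)) / (h * w) for g in gradients]
    heatmap = [[0.0] * w for _ in range(h)]
    for alpha, fmap in zip(alphas, activations):
        for i in range(h):
            for j in range(w):
                heatmap[i][j] += alpha * fmap[i][j]
    # ReLU keeps only regions that increase the class score
    return [[max(v, 0.0) for v in row] for row in heatmap]


if __name__ == "__main__":
    # Two hypothetical 2x2 feature maps: channel 0 supports the class, channel 1 opposes it
    acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
    grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
    print(grad_cam(acts, grads))
```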

Fusion of CNNs with Other Technologies

Combining CNNs and Transformers

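Models that bring Transformer ideas into vision have become strong competitors to pure CNNs. Here's a comparison of a pure Vision Transformer (ViT), a hierarchical Transformer (Swin), a CNN modernized with Transformer-inspired design choices (ConvNeXt), and a CNN-Transformer hybrid (CoAtNet):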

| Model | Top-1 Accuracy (ImageNet) | Parameters |
|---|---|---|
| ViT-B/16 | 77.9% | 86M |
| Swin-T | 81.3% | 29M |
| ConvNeXt-T | 82.1% | 29M |
| CoAtNet-0 | 81.6% | 25M |

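Hybrid designs such as CoAtNet combine the strength of convolutions in capturing local spatial information with the Transformer's ability to model long-range dependencies. ConvNeXt, meanwhile, shows that a pure CNN modernized with Transformer-inspired design choices can outperform a Transformer of similar size (82.1% vs. 81.3% for Swin-T).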
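Scaled dot-product self-attention is the core of the Transformer side of these models. Here's a plain-Python sketch of it. It assumes the queries, keys, and values are already given. In a hybrid model they would be learned linear projections of a CNN feature map's positions, flattened into a sequence; those projections are omitted here. The key contrast with convolution is reach: every output position mixes information from all positions, whereas a stride-1 3×3 convolution widens its receptive field by only two pixels per layer.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Each output row is a weighted average of all value rows, so every
    position can draw on every other position in a single layer.
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(wt * v[j] for wt, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs


if __name__ == "__main__":
    # Hypothetical 3-token sequence (e.g. three positions of a flattened feature map)
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    print(attention(queries=keys, keys=keys, values=values))
```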

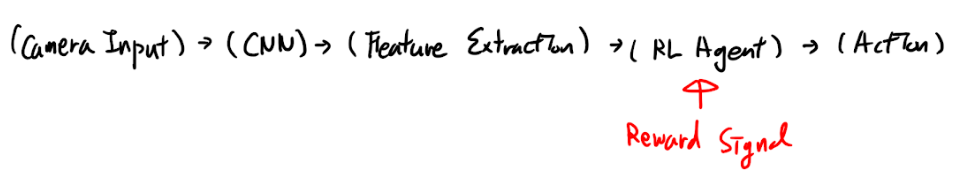

Integration of CNNs and Reinforcement Learning

CNNs have been successfully integrated with reinforcement learning in various applications:

- Deep Q-Networks (DQN) for Atari games

- AlphaGo and AlphaZero for board games

- Visual navigation in complex 3D environments

- Robotic manipulation tasks

Here's a simplified diagram of a CNN-RL integration for visual navigation:

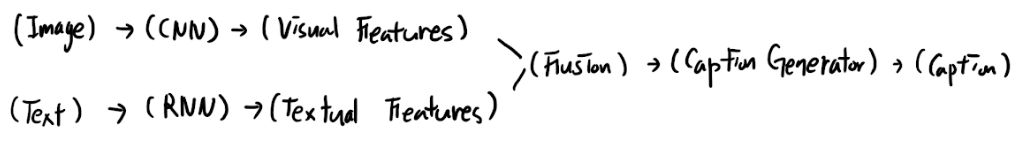
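In a Deep Q-Network, the CNN maps raw frames to one Q-value per action, and training regresses those values toward Bellman targets. The sketch below computes the targets and a squared TD loss for a mini-batch. It assumes the target network's Q-values for the next states have already been computed. The CNN itself, the replay buffer, and the optimizer are left out, and the mini-batch is hypothetical. The original DQN clipped the error term, and a Huber loss is a common substitute for the plain squared error used here.

```python
def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """Bellman targets y_i = r_i + gamma * max_a' Q_target(s'_i, a').

    next_q_values[i] lists the target network's Q-value for every action in
    the next state s'_i. Terminal transitions (dones[i] is True) do not
    bootstrap, so their target is just the reward.
    """
    return [
        r if done else r + gamma * max(q_next)
        for r, q_next, done in zip(rewards, next_q_values, dones)
    ]


def td_loss(q_taken, targets):
    """Mean squared TD error between Q(s, a) for the actions taken and the targets."""
    return sum((q - y) ** 2 for q, y in zip(q_taken, targets)) / len(targets)


if __name__ == "__main__":
    # A hypothetical mini-batch of two transitions with two actions each
    targets = dqn_targets(
        rewards=[1.0, 0.0],
        next_q_values=[[0.5, 2.0], [3.0, 1.0]],
        dones=[False, True],
        gamma=0.9,
    )
    print(targets)
    print(td_loss(q_taken=[2.5, 0.5], targets=targets))
```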

Utilization of CNNs in Multimodal Learning

CNNs play a crucial role in multimodal learning, often serving as feature extractors for visual data. A classic example is the encoder-decoder architecture for image captioning: a CNN encodes the image into a feature vector, and an RNN decoder conditioned on those features generates the caption one word at a time.
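The word-by-word generation in image captioning can be sketched as a greedy decoding loop. Here `step` is a hypothetical decoder function standing in for the RNN. Given the previous word, its recurrent state, and the CNN image features, it returns scores for candidate next words and an updated state. The toy decoder in the example ignores the features and follows a fixed script. Beam search is a common alternative to picking the single best word at each step.

```python
def greedy_caption(image_features, step, start="<start>", end="<end>", max_len=20):
    """Generate a caption greedily from CNN image features.

    `step(prev_word, state, image_features)` is a hypothetical decoder step
    (standing in for the RNN) returning (scores, new_state), where scores
    maps each candidate next word to a score.
    """
    words, state, prev = [], None, start
    for _ in range(max_len):
        scores, state = step(prev, state, image_features)
        prev = max(scores, key=scores.get)  # keep only the highest-scoring word
        if prev == end:
            break
        words.append(prev)
    return " ".join(words)


if __name__ == "__main__":
    # Toy decoder that ignores the features and follows a fixed script
    script = {"<start>": "a", "a": "dog", "dog": "<end>"}

    def toy_step(prev, state, features):
        return {script[prev]: 1.0}, state

    print(greedy_caption(image_features=[0.1, 0.7], step=toy_step))
```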

Future Prospects for CNNs

Exploration of New Application Domains

CNNs are being applied to various new domains. Here's a table showcasing some emerging applications:

| Domain | Application | Potential Impact |
|---|---|---|
| Medical Imaging | Disease detection, organ segmentation | High |
| Satellite Imagery | Environmental monitoring, urban planning | High |
| Materials Science | Material property prediction | Medium |
| Astronomy | Galaxy classification, exoplanet detection | Medium |
| Art and Creativity | Style transfer, image generation | Medium |

Hardware Optimization and Edge Computing

The future of CNNs is closely tied to hardware advancements. Here's a rough, qualitative comparison of hardware platforms for CNN inference; actual power, speed, and cost vary widely with the specific device, model, and workload:

| Platform | Power Consumption | Inference Speed | Cost |
|---|---|---|---|
| CPU | High | Slow | Low |
| GPU | Very High | Fast | High |
| FPGA | Medium | Medium | Medium |
| ASIC (e.g., TPU) | Low | Very Fast | High |
| Neuromorphic Hardware | Very Low | Fast | Medium |

Edge AI frameworks are being developed to optimize CNN deployment on resource-constrained devices, enabling real-time inference for various applications.
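Post-training quantization is one technique that edge deployment toolchains commonly apply. It stores weights, and often activations, as 8-bit integers instead of 32-bit floats, which cuts storage by 4× and enables fast integer arithmetic. Here's a minimal sketch of the affine (scale and zero-point) scheme for a single tensor. It assumes per-tensor quantization to the signed int8 range, and the weights in the example are hypothetical. Real toolchains typically add per-channel scales, calibration data, and fused integer kernels.

```python
def quantize_int8(values):
    """Affine per-tensor quantization of floats to signed int8.

    Returns (codes, scale, zero_point) such that
    value ~= scale * (code - zero_point).
    """
    lo = min(min(values), 0.0)  # the representable range must include 0.0
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 if hi > lo else 1.0  # 256 int8 levels span [lo, hi]
    zero_point = round(-128 - lo / scale)  # integer code that represents 0.0
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [scale * (c - zero_point) for c in codes]


if __name__ == "__main__":
    weights = [-1.0, 0.0, 0.5, 2.0]  # hypothetical float32 weights
    codes, scale, zp = quantize_int8(weights)
    print("codes:", codes, "scale:", scale, "zero point:", zp)
    print("dequantized:", dequantize(codes, scale, zp))
```

Forcing the range to include 0.0 guarantees that zero (common in ReLU outputs and padding) is represented exactly. The rounding error for values inside the range is at most half a quantization step.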

Ethical Considerations and Responsible AI Development

As CNNs become more prevalent, ethical considerations are gaining importance. Key areas of focus include:

- Bias and fairness

- Privacy preservation

- Environmental impact

- Transparency and accountability

Researchers are developing frameworks and methodologies for ethical AI development, including:

- Fairness-aware learning algorithms

- Privacy-preserving machine learning techniques

- Green AI initiatives

- Explainable AI frameworks

These efforts aim to ensure that CNN technology is developed and deployed responsibly, maximizing its benefits while minimizing potential risks and negative impacts.