Getting Started with n8n: Your Ultimate Workflow Automation Tool

Core Concept

n8n, short for 'nodemation', is a workflow automation tool that lets users design and run complex workflows by connecting nodes in a visual editor.

Key Features

Visual Flow Builder

Offers a drag-and-drop editor that lets even users without a technical background build workflows.

Diverse Node Support

Provides over 400 pre-built nodes for connecting to a wide range of applications and services. Key node types include Trigger, Action, Function (now replaced by the Code node), and Integration nodes.

AI Integration

Integrates with AI services like OpenAI to automate tasks such as text summarization and natural language processing.

Error Handling and Debugging

Lets you inspect each node's input and output in past executions, retry failed nodes, and route failures to a dedicated error workflow through the Error Trigger node.

Hosting Options

Can be self-hosted or used through the managed n8n Cloud service, depending on your environment and requirements.

Low-code Support

Many tasks need no code at all, but you can add JavaScript (for example, in a Code node) when a workflow needs more complex logic.

Use Cases

n8n is primarily used in scenarios such as:

- Data integration: collecting data from multiple services and processing or storing it in one place

- Building notification and alert systems

- Automating data transformation and processing

- Integrating and automating various services

n8n suits both individual users and companies. n8n Cloud is a paid service, but the self-hosted Community Edition can be used for free under n8n's fair-code Sustainable Use License.

Installation Method (Windows 10)

See n8n's self-hosted-ai-starter-kit (https://github.com/n8n-io/self-hosted-ai-starter-kit) for instructions on installing n8n together with Ollama on a local PC or server. If you don't need a local LLM such as Ollama and can use external APIs instead, you can simply install and run n8n with Node.js.
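For the Node.js route, n8n can be started with npx. A minimal sketch, assuming Node.js (which ships npm and npx) is already installed and on PATH; the function name start_n8n_with_node is hypothetical and only wraps the npx call with a prerequisite check:

```shell
#!/usr/bin/env sh
# Hypothetical helper: run n8n directly on Node.js, without Docker or Ollama.
# Assumes Node.js (which ships npm and npx) is installed and on PATH.
start_n8n_with_node() {
  if ! command -v npx >/dev/null 2>&1; then
    echo "npx not found: install Node.js first" >&2
    return 1
  fi
  # npx fetches the n8n package if it is not cached (npm may ask to confirm),
  # then starts the editor, by default at http://localhost:5678
  npx n8n
}
```

Call start_n8n_with_node from Git Bash or WSL; in a Windows command prompt you can simply type npx n8n. Alternatively, install n8n globally with npm install n8n -g and start it with n8n start.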

In this post, we'll install it along with Ollama.

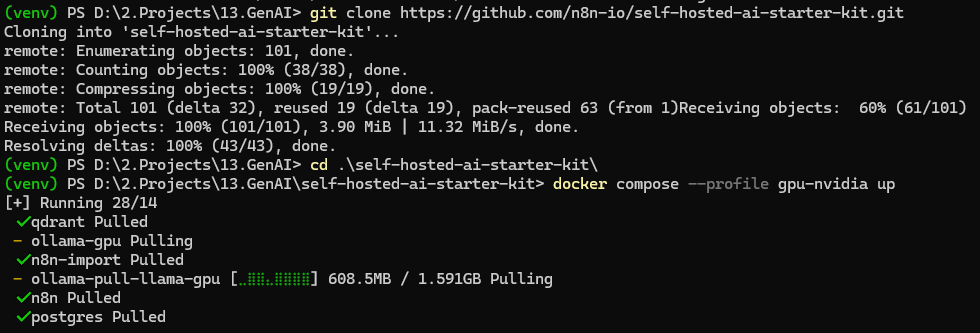

Quick Installation

The steps below follow the starter kit's README. Docker with Docker Compose (Docker Desktop on Windows) must already be installed:

```shell
git clone https://github.com/n8n-io/self-hosted-ai-starter-kit.git
cd self-hosted-ai-starter-kit

# Then run ONE of the following:
docker compose --profile gpu-nvidia up   # run Ollama on an NVIDIA GPU
docker compose --profile cpu up          # run Ollama on the CPU only
```
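Which profile to use depends on your hardware. A minimal sketch that picks one automatically, assuming that the presence of nvidia-smi means the NVIDIA GPU is also usable from Docker (on Windows this additionally requires Docker Desktop's WSL 2 GPU support, which this check cannot verify); pick_profile is a hypothetical name:

```shell
#!/usr/bin/env sh
# Hypothetical helper: choose a docker compose profile for the starter kit.
# Heuristic (assumption): nvidia-smi on PATH => NVIDIA GPU usable by Docker.
pick_profile() {
  if command -v nvidia-smi >/dev/null 2>&1; then
    echo gpu-nvidia
  else
    echo cpu
  fi
}

# Usage, from inside self-hosted-ai-starter-kit (runs in the foreground):
#   docker compose --profile "$(pick_profile)" up
```

Adding -d to the docker compose command runs the stack in the background instead of tying up the terminal.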

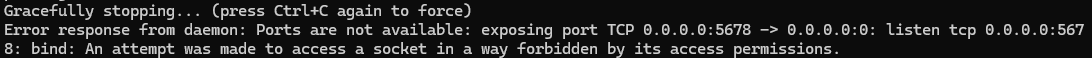

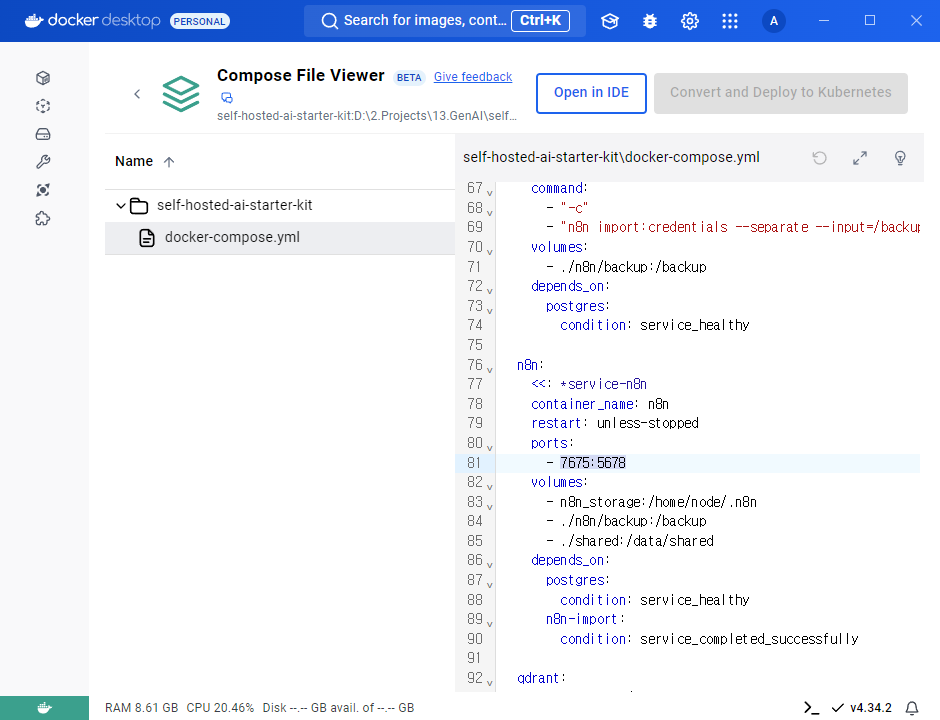

Troubleshooting

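By default, n8n listens on port 5678, which may already be in use by another program.

In that case, change the port mapping for the n8n service in the starter kit's docker-compose.yml: keep the container side at 5678 and replace the host side with a free port (for example, 5679:5678), then open n8n on that port instead.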
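On Windows, netstat -ano | findstr :5678 shows which process ID holds the port. The edit itself can be scripted; a minimal sketch, assuming docker-compose.yml writes the mapping as 5678:5678 (host:container). remap_n8n_port is a hypothetical helper and 5679 is just an example port:

```shell
#!/usr/bin/env sh
# Hypothetical helper: move n8n's *host* port, leaving the container port at 5678.
# Assumes the compose file contains the mapping as "5678:5678" (host:container).
remap_n8n_port() {
  file=$1
  new_port=$2
  case $new_port in
    ''|*[!0-9]*) echo "port must be a number: $new_port" >&2; return 1 ;;
  esac
  # Rewrite only the left-hand (host) side; the [^0-9] guard keeps it from
  # matching inside a longer number such as 15678:5678.
  sed "s/\([^0-9]\)5678:5678/\1${new_port}:5678/" "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}

# Usage (from inside self-hosted-ai-starter-kit, e.g. in Git Bash):
#   remap_n8n_port docker-compose.yml 5679
#   docker compose --profile cpu up
# n8n is then reachable at http://localhost:5679
```

Only the host side changes because n8n keeps listening on 5678 inside the container; Docker simply forwards the new host port to it.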

Basic Operation Check

Once the stack is running, open http://localhost:5678/workflow/srOnR8PAY3u4RSwb. This is a basic chat workflow that uses Ollama and ships with the starter kit, which makes it a simple example for learning how workflows are built.
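The first start can take a while, so the page may not load right away. A minimal sketch of a readiness check, assuming curl is installed and that n8n's /healthz endpoint and Ollama's /api/tags endpoint are reachable on their default ports 5678 and 11434; wait_for_url is a hypothetical helper:

```shell
#!/usr/bin/env sh
# Hypothetical helper: poll a URL until it returns an HTTP 2xx status, or give up.
# Assumes curl is installed. Arguments: URL [max attempts, default 30].
wait_for_url() {
  url=$1
  tries=${2:-30}
  i=0
  while :; do
    # -f: treat HTTP errors as failures; -sS: quiet, but still print errors
    if curl -fsS --max-time 5 -o /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    if [ "$i" -ge "$tries" ]; then
      return 1
    fi
    sleep 2
  done
}

# Usage (assumed endpoints and default ports):
#   wait_for_url http://localhost:5678/healthz && echo "n8n is up"
#   wait_for_url http://localhost:11434/api/tags && echo "Ollama is up"
```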

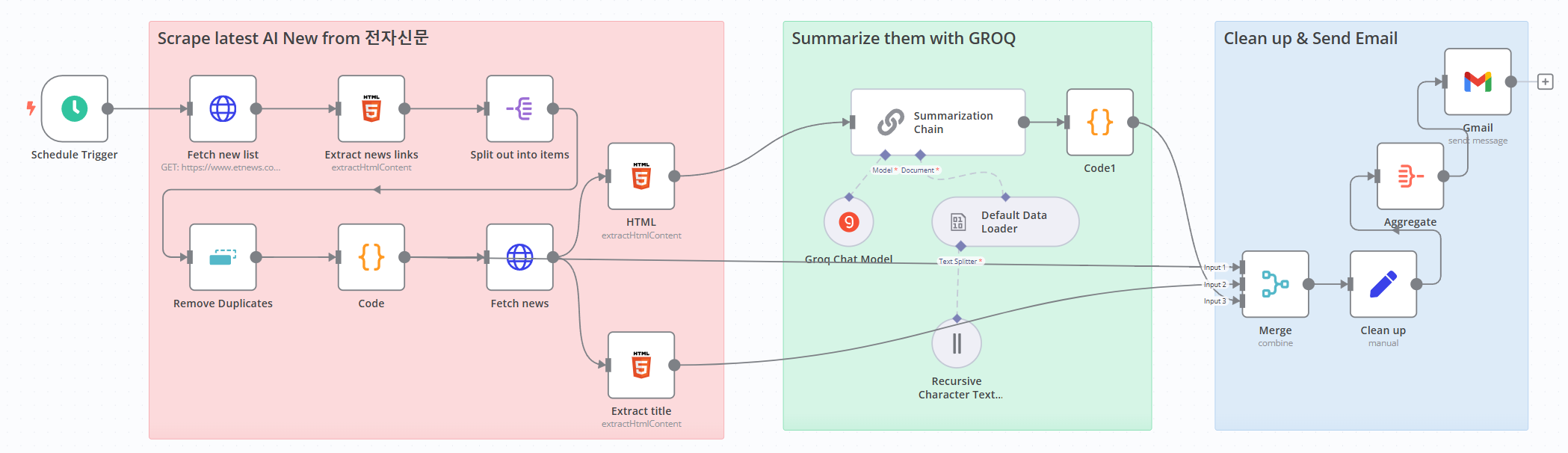

News Scraping Workflow

Below is a news scraping workflow I created. I'll explain it in detail when I have time; for now, see the image below. Every day, it reads the software (S/W) section of Korea's Electronic Times and sends an email.