Machine Learning Algorithms Demystified

1. Supervised Learning Algorithms

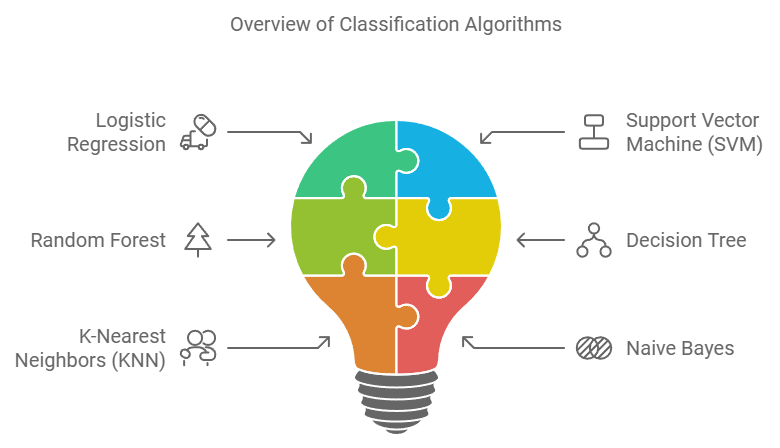

Classification Algorithms

Logistic Regression

Logistic Regression is primarily used for binary classification. It passes a linear combination of the input features through a sigmoid function to output a probability between 0 and 1, and that probability is thresholded to predict the class. It is simple and interpretable, and because it has few parameters it is less prone to overfitting than more flexible models, which makes it widely used. However, it cannot model non-linear relationships unless non-linear features are added by hand.
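As a rough sketch of the prediction step described above, the snippet below computes the sigmoid of a linear combination and thresholds it at 0.5. The weights, bias, and function names are hypothetical, hand-picked values for illustration; in practice the parameters would be learned from data.

```python
import math

def sigmoid(z):
    """Map any real number to the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(features, weights, bias):
    """Probability of the positive class for a single example."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

def predict_class(features, weights, bias, threshold=0.5):
    """Turn the probability into a 0/1 class label."""
    return 1 if predict_proba(features, weights, bias) >= threshold else 0

# Hypothetical parameters, not learned from any dataset.
weights = [2.0, -1.0]
bias = 0.0
p = predict_proba([1.0, 2.0], weights, bias)  # linear combination is exactly 0
```

The 0.5 threshold is only a common default; a different cut-off may suit problems where the two kinds of error have different costs.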

Support Vector Machine (SVM)

SVM is an algorithm that finds the optimal hyperplane to separate data points into different classes. It operates by maximizing the margin and can handle non-linear classification through the kernel trick. SVM is effective in high-dimensional spaces and relatively robust against overfitting. However, it can be computationally expensive for large datasets.

Random Forest

Random Forest is an ensemble learning method that builds multiple decision trees and combines their results for classification. Each tree is trained on a bootstrap sample of the data and considers only a random subset of features at each split, which reduces overfitting and improves generalization. It provides feature importance scores and works well on many types of data. However, the combined model is harder to interpret than a single tree.

Decision Tree

Decision Tree is an algorithm that performs classification by splitting data based on features into a tree-like structure. Each internal node represents a test on a feature, while each leaf node represents a class label. It's intuitive, easy to interpret, and can model non-linear relationships. However, it's prone to overfitting and can be unstable with small variations in data.
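To make the idea of "a test on a feature" concrete, here is a minimal sketch of how one candidate split could be scored. It assumes Gini impurity as the splitting criterion, which is one common choice and not something the text above specifies; the function names and toy data are illustrative.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure group, larger for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def split_impurity(rows, labels, feature_index, threshold):
    """Weighted Gini impurity after the test rows[feature_index] <= threshold."""
    left = [y for x, y in zip(rows, labels) if x[feature_index] <= threshold]
    right = [y for x, y in zip(rows, labels) if x[feature_index] > threshold]
    n = len(labels)
    return len(left) / n * gini(left) + len(right) / n * gini(right)

# Toy data: one numeric feature, two classes.
rows = [(1.0,), (2.0,), (8.0,), (9.0,)]
labels = ["small", "small", "large", "large"]
```

A tree builder would evaluate many thresholds like this and keep the one with the lowest weighted impurity, then repeat on each side.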

K-Nearest Neighbors (KNN)

KNN classifies a data point based on the majority class of its K nearest neighbors. It uses a distance metric (e.g., Euclidean distance) to find neighbors. KNN is simple, intuitive, and can capture complex decision boundaries. However, it can be computationally expensive for large datasets and is sensitive to the scale of features.
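The majority-vote rule can be sketched in a few lines. This version assumes Euclidean distance and a brute-force search over all training points; `knn_predict` and the toy data are illustrative names, not part of any library.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points closest to query."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

train_points = [(0, 0), (0, 1), (1, 0), (5, 5), (6, 5), (5, 6)]
train_labels = ["a", "a", "a", "b", "b", "b"]
```

Because every prediction scans the whole training set, the cost grows with dataset size, which is the expense mentioned above.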

Naive Bayes

Naive Bayes uses Bayes' theorem with the "naive" assumption of feature independence to perform classification. It assumes each feature contributes independently to the probability of a class. It's fast, works well with small amounts of training data, and is particularly effective in text classification. However, the independence assumption is often violated in real-world scenarios.

Regression Algorithms

Linear Regression

Linear Regression models the linear relationship between input variables and the output variable. It uses the least squares method to find the line (or hyperplane) that minimizes the error. It's simple, interpretable, and computationally fast. However, it can't directly model non-linear relationships and is sensitive to outliers.
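For a single input variable, the least squares line has a closed-form solution. The sketch below assumes that one-variable case; `fit_line` is an illustrative name, and the data is made up to lie exactly on y = 2x + 1.

```python
def fit_line(xs, ys):
    """Least squares slope and intercept for one input variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums around the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
```

Since the errors are squared, a single extreme point can pull the fitted line noticeably, which is the outlier sensitivity noted above.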

Polynomial Regression

Polynomial Regression uses polynomial terms of input variables to model non-linear relationships. It's an extension of linear regression, adding powers of input variables as new features. It can capture complex relationships but risks overfitting, and the choice of polynomial degree is crucial.
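The "powers as new features" step can be illustrated on its own. This is a minimal sketch for one input variable; `polynomial_features` is a hypothetical helper, and the expanded features would then be passed to an ordinary linear regression.

```python
def polynomial_features(x, degree):
    """Expand a single value x into [x, x^2, ..., x^degree]."""
    return [x ** power for power in range(1, degree + 1)]

# Each row becomes the input to a linear model with `degree` coefficients.
expanded = [polynomial_features(x, 3) for x in [1.0, 2.0, 3.0]]
```

Raising `degree` adds one coefficient per extra power, which is why a high degree can fit noise in a small dataset.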

Ridge Regression

Ridge Regression is a variant of linear regression that uses L2 regularization to prevent overfitting. It adds the sum of squared coefficients as a penalty to the cost function. This keeps all features but shrinks coefficients towards zero. It helps with multicollinearity and improves the model's generalization performance.

Lasso Regression

Lasso Regression is a variant of linear regression that uses L1 regularization. It adds the sum of absolute values of coefficients as a penalty to the cost function. This can drive some coefficients exactly to zero, effectively performing feature selection. It's useful for creating simpler models and identifying important features.
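The difference between the L2 and L1 penalties is easiest to see with a single coefficient and no intercept, where both problems have closed-form solutions. The sketch below assumes that simplified setting and the cost functions written in the docstrings; the function names and `lam` (the penalty strength) are illustrative.

```python
import math

def ols_weight(xs, ys):
    """Minimizes sum((y - w*x)^2), with no penalty."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def ridge_weight(xs, ys, lam):
    """Minimizes sum((y - w*x)^2) + lam * w^2."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def lasso_weight(xs, ys, lam):
    """Minimizes sum((y - w*x)^2) + lam * |w| via soft-thresholding."""
    rho = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(rho) - lam / 2.0, 0.0)  # clipped at zero
    return math.copysign(shrunk, rho) / sxx

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # exactly y = 2x
```

With this data, increasing `lam` makes the ridge weight smaller but never zero, while the lasso weight reaches exactly zero once `lam` is large enough.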

2. Unsupervised Learning Algorithms

K-Means

K-Means divides data into K clusters by iteratively updating cluster centers (centroids) and assigning data points to the nearest center. It's simple, efficient, and applicable to large datasets. However, it's sensitive to initial centroid selection and assumes spherical cluster shapes.
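The assign-then-update loop can be sketched as follows. This version assumes Euclidean distance and takes the initial centroids from the caller, which makes the dependence on initialization explicit; `kmeans` and the toy points are illustrative.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Alternate assignment and centroid updates until nothing changes."""
    centroids = [tuple(c) for c in centroids]
    assignments = []
    for _ in range(iterations):
        # Assignment step: index of the nearest centroid for each point.
        assignments = [
            min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j, old in enumerate(centroids):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:
                new_centroids.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members))
                )
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, assignments

points = [(0, 0), (0, 2), (10, 10), (10, 12)]
centers, labels = kmeans(points, centroids=[(0, 0), (10, 10)])
```

Starting from different initial centroids can lead to a different final clustering, so practical implementations often run several initializations and keep the best result.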

DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

DBSCAN is a density-based clustering algorithm that defines clusters as high-density regions. It uses concepts of core points, border points, and noise points. It doesn't require specifying the number of clusters beforehand and can find arbitrarily shaped clusters. It's robust to noise but may struggle with clusters of varying densities.

Hierarchical Clustering

Hierarchical Clustering creates a tree-like structure (dendrogram) of data points. It can use either a bottom-up (agglomerative) or top-down (divisive) approach. It provides a hierarchy of clusters, allowing for different levels of granularity. However, it can be computationally expensive for large datasets, and once merged or split, clusters are not reconsidered.

Principal Component Analysis (PCA)

PCA is a technique for dimensionality reduction that preserves as much variance in the data as possible. It finds the principal axes (components) of data and uses them as a new coordinate system. It's useful for data compression, noise reduction, and visualization. However, it's limited to linear transformations and can be difficult to interpret.
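As an illustration of "finding the principal axes", the sketch below estimates only the first principal component by running power iteration on the sample covariance matrix. Power iteration is a stand-in chosen to keep the example dependency-free; library implementations typically use an eigendecomposition or SVD instead. The function name and starting vector are assumptions.

```python
import math

def first_principal_component(points, iterations=100):
    """Approximate the direction of greatest variance as a unit vector."""
    n, d = len(points), len(points[0])
    means = [sum(p[i] for p in points) / n for i in range(d)]
    centered = [[p[i] - means[i] for i in range(d)] for p in points]
    # Sample covariance matrix (d x d).
    cov = [
        [sum(row[i] * row[j] for row in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    # Arbitrary asymmetric start; a start orthogonal to the answer would fail.
    v = [1.0 / (i + 1) for i in range(d)]
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break  # degenerate data or unlucky start; v is left as-is
        v = [x / norm for x in w]
    return v

diagonal = first_principal_component([(-1.0, -1.0), (0.0, 0.0), (1.0, 1.0)])
```

Projecting each centered point onto this vector gives a one-dimensional representation that keeps the largest share of the variance.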

t-SNE (t-Distributed Stochastic Neighbor Embedding)

t-SNE is a non-linear dimensionality reduction technique used for visualizing high-dimensional data in low dimensions (usually 2D or 3D). It converts similarities between data points to probability distributions and tries to preserve these distributions in lower dimensions. It preserves local structure well but can be computationally expensive and challenging to apply to large datasets.

3. Reinforcement Learning Algorithms

Q-Learning

Q-Learning is a model-free reinforcement learning algorithm that learns the state-action value function (Q-function) to find the optimal policy. It updates the Q-value of each state-action pair as it gathers experience. Q-Learning can learn without a model of the environment. In the tabular setting it converges to the optimal Q-function, provided every state-action pair keeps being visited and the learning rate decays appropriately. However, a Q-table can have high memory requirements for large state spaces.
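A single tabular update can be sketched as below. The Q-table is assumed to be a dictionary keyed by (state, action) with missing entries treated as 0.0; `alpha` (learning rate) and `gamma` (discount factor) are conventional names, and the states and actions are made up.

```python
def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Move Q(state, action) toward reward + gamma * max_a Q(next_state, a)."""
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
    return q[(state, action)]

actions = ["left", "right"]
q = {("s1", "left"): 1.0, ("s1", "right"): 2.0}
new_value = q_learning_update(q, "s0", "right", 1.0, "s1", actions)
```

The `max` over next actions is what makes the update independent of the action the agent actually takes next.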

SARSA (State-Action-Reward-State-Action)

SARSA is similar to Q-Learning but updates Q-values using the action actually taken in the next state rather than the best available one. It's an on-policy algorithm, learning while following the current policy. SARSA tends to learn more conservative policies, which can be useful in environments where exploration is risky, but it may take longer to find the optimal policy.
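The on-policy update differs from Q-Learning in one term: it uses the value of the next action the agent actually chose. The sketch below assumes a dictionary Q-table with missing entries treated as 0.0; names and values are illustrative.

```python
def sarsa_update(q, state, action, reward, next_state, next_action,
                 alpha=0.1, gamma=0.9):
    """Move Q(state, action) toward reward + gamma * Q(next_state, next_action)."""
    next_value = q.get((next_state, next_action), 0.0)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * next_value - old)
    return q[(state, action)]

q = {("s1", "left"): 1.0, ("s1", "right"): 2.0}
# The agent's policy picked "left" in s1, even though "right" has a higher value.
new_value = sarsa_update(q, "s0", "right", 1.0, "s1", "left")
```

Because exploratory actions feed into the target, the learned values reflect the risk of the policy being followed, which is one way to understand the more conservative behaviour.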

Deep Q-Learning

Deep Q-Learning uses neural networks to approximate the Q-function. It employs experience replay and target networks to improve learning stability. This algorithm can handle complex state spaces and automatically perform feature extraction. However, learning can be unstable, and hyperparameter tuning is crucial.

4. Semi-Supervised Learning Algorithms

Self-Training

Self-Training involves training a model on a small amount of labeled data, then using it to make predictions on unlabeled data. High-confidence predictions are added to the training set. It's simple and applicable to various models, but initial prediction errors can be amplified.

Co-Training

Co-Training trains separate classifiers on two or more independent feature sets. Each classifier updates itself using the high-confidence predictions of the other classifiers. It can leverage information from different views of the data, but finding truly independent feature sets can be challenging.

5. Ensemble Learning Algorithms

Bagging (Bootstrap Aggregating)

Bagging draws multiple samples from the original dataset with replacement, trains an individual model on each sample, and aggregates the results. It reduces variance and helps limit overfitting, and it forms the basis for Random Forests. Predictions are typically averaged (regression) or voted on (classification). Bagging is robust to noise, but the combined model can be difficult to interpret.
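The sample, train, and aggregate steps can be sketched with a deliberately trivial base model. Here each "model" is a hypothetical threshold classifier whose cut-off is the mean of its bootstrap sample; a real use would substitute decision trees or another learner.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in range(len(data))]

def majority_vote(predictions):
    """Most common prediction among the individual models."""
    return Counter(predictions).most_common(1)[0][0]

def fit_threshold(sample):
    """Toy base learner: predicts True when x exceeds the sample mean."""
    cutoff = sum(sample) / len(sample)
    return lambda x: x > cutoff

def bagging_predict(models, x):
    """Aggregate the models' predictions for x by voting."""
    return majority_vote([model(x) for model in models])

rng = random.Random(0)  # seeded only to make the sketch repeatable
data = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
models = [fit_threshold(bootstrap_sample(data, rng)) for _ in range(5)]
```

Each model sees a slightly different dataset, so their individual errors partly cancel when the votes are combined.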

Boosting

Boosting trains weak learners sequentially, with each new learner focusing on the errors of the previous ones. AdaBoost does this by giving more weight to misclassified instances at each step, while Gradient Boosting fits each new learner to the remaining errors of the current ensemble. Boosting often achieves high predictive performance but risks overfitting and can be computationally expensive.
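The reweighting idea can be shown with one round of the classic binary AdaBoost update. This sketch assumes the instance weights already sum to 1 and that the weak learner's weighted error is strictly between 0 and 1; the function name is illustrative.

```python
import math

def adaboost_reweight(weights, correct):
    """One AdaBoost round: return the learner's vote weight and new instance weights.

    weights: current instance weights, assumed to sum to 1.
    correct: booleans, True where the weak learner classified the instance correctly.
    """
    error = sum(w for w, ok in zip(weights, correct) if not ok)
    alpha = 0.5 * math.log((1.0 - error) / error)
    # Shrink weights of correct instances, grow weights of mistakes.
    updated = [w * math.exp(-alpha if ok else alpha)
               for w, ok in zip(weights, correct)]
    total = sum(updated)
    return alpha, [w / total for w in updated]

alpha, new_weights = adaboost_reweight([0.25] * 4, [True, True, True, False])
```

After the update the single misclassified instance carries half of the total weight, so the next weak learner is pushed to get it right.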

Stacking

Stacking uses predictions from multiple base models as inputs to train a meta-model. It can combine diverse types of models to leverage their individual strengths. Stacking can achieve high predictive performance but results in complex model structures and high computational costs.

6. Deep Learning Algorithms

Convolutional Neural Network (CNN)

CNNs are primarily used for image processing. They consist of convolutional layers, pooling layers, and fully connected layers, effectively learning local features in images. CNNs automatically perform feature extraction and can learn spatial hierarchies, but they require large amounts of data and computational resources.
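What a convolutional layer computes for one filter can be sketched directly. The function below assumes a single-channel image, no padding, and stride 1, and it does not flip the kernel (the cross-correlation convention used by most deep learning frameworks). The kernel values are hand-picked here, whereas a CNN would learn them.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]  # responds to differences along the diagonal
feature_map = conv2d_valid(image, kernel)
```

Each output value depends only on a small patch of the input, which is how the layer picks up local features.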

Recurrent Neural Network (RNN)

RNNs are specialized for processing sequential data. They have a recurrent structure that passes information from previous steps to the current step, allowing them to learn temporal dependencies. RNNs are used in natural language processing and time series prediction but can suffer from information loss (vanishing gradient) in long sequences.
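The recurrence can be sketched with a single hidden unit and scalar weights. This assumes a tanh activation and hand-picked weights (`w_x`, `w_h`, `b` are illustrative names); real networks use weight matrices learned by backpropagation through time.

```python
import math

def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Return the hidden state after each step of a one-unit RNN."""
    hidden_states = []
    h = h0
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        hidden_states.append(h)
    return hidden_states

# A single input pulse followed by zeros.
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
```

With these weights the influence of the first input fades at every step, a small-scale picture of how information from early in a sequence can be lost.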

Long Short-Term Memory (LSTM)

LSTMs are a type of RNN designed to address the information loss problem in long sequences. They use gate mechanisms to selectively remember and forget information. LSTMs can effectively learn long-term dependencies but have a complex structure and high computational cost.

Autoencoder

Autoencoders learn by compressing (encoding) input data and then reconstructing (decoding) it. They can learn important features of data and reduce dimensionality. Autoencoders are used for noise reduction, feature extraction, and anomaly detection, but the learned representations can be difficult to interpret.

Generative Adversarial Network (GAN)

GANs consist of two competing neural networks: a generator that creates fake data and a discriminator that tries to distinguish real from fake data. Through this process, GANs can generate highly realistic fake data. They're used for image generation, style transfer, and more, but training can be unstable and prone to mode collapse.

7. Other Algorithms

Association Rule Learning

Association Rule Learning discovers relationships between items in large datasets. Popular algorithms include Apriori and FP-Growth. It's used in market basket analysis and recommendation systems. While it can find patterns in large datasets, it can be computationally expensive and may generate many rules.
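Rules are usually ranked with measures such as support and confidence. The sketch below computes both by brute force over a made-up list of transactions; it illustrates the measures only and is not an implementation of Apriori or FP-Growth.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    hits = sum(1 for t in transactions if itemset <= set(t))
    return hits / len(transactions)

def confidence(transactions, antecedent, consequent):
    """Estimated probability of consequent given antecedent."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)

# Hypothetical shopping baskets.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]
```

Here the rule "bread implies milk" has support 0.5 and confidence 2/3; mining algorithms prune the search by discarding itemsets whose support falls below a chosen minimum.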

Anomaly Detection

Anomaly Detection identifies unusual patterns or data points in a dataset. It includes statistical methods, distance-based methods, and density-based methods. It's used in fraud detection and system monitoring, but defining the boundary between normal and abnormal can be challenging.
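One of the simplest statistical methods is a z-score rule: flag values that lie too many standard deviations from the mean. The sketch below assumes roughly bell-shaped data and a threshold chosen by the user; the function name and sample readings are illustrative.

```python
import statistics

def zscore_outliers(values, threshold=3.0):
    """Return the values whose distance from the mean exceeds threshold std devs."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)  # population standard deviation
    if std == 0:
        return []  # all values identical, nothing stands out
    return [v for v in values if abs(v - mean) / std > threshold]

readings = [10, 11, 9, 10, 12, 10, 50]
flagged = zscore_outliers(readings, threshold=2.0)
```

The threshold is exactly the "boundary between normal and abnormal" mentioned above: lowering it catches more anomalies at the cost of more false alarms. Note also that a large outlier inflates the standard deviation it is measured against.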

These diverse algorithms each have their strengths and weaknesses. The choice of algorithm depends on the problem characteristics, data properties, computational resources, and other factors. Often, combining multiple algorithms or using hybrid approaches can yield better performance.