Overview of Classifier-Free Guidance (CFG)

Introduction to Classifier-Free Guidance

Classifier-Free Guidance (CFG) is a technique used in diffusion models to improve the quality and controllability of generated samples. It has become a crucial component in state-of-the-art text-to-image generation systems, such as Stable Diffusion. CFG allows for better alignment between the generated content and the input prompt or conditioning information.

The basic idea behind CFG is to combine the unconditional and conditional predictions of the diffusion model during sampling. This combination is controlled by a guidance scale, which determines how much influence the conditioning information has on the generation process. For scales between 0 and 1 the result interpolates between the two predictions; for the larger scales used in practice it extrapolates past the conditional prediction. A higher guidance scale typically results in outputs that more closely match the input prompt, but can also lead to artifacts and reduced sample diversity.

CFG works by using two forward passes through the diffusion model: one with the conditioning information (e.g., text prompt) and one without. The difference between these two passes is then used to guide the sampling process. This approach has several advantages over previous methods that required training a separate classifier:

- Simplicity: CFG needs no separate classifier or extra model component. A single diffusion model supplies both predictions, provided it was trained with the conditioning randomly dropped so that it can also make unconditional predictions.

- Flexibility: The guidance scale can be adjusted at inference time, allowing the trade-off between prompt adherence and sample quality to be tuned without retraining.

- Improved performance: CFG has been shown to produce higher-quality samples compared to classifier-based guidance methods.

However, CFG is not without its limitations. As the guidance scale increases, the generated samples can become less diverse and may exhibit artifacts such as oversaturation or unrealistic details. These issues have led researchers to explore improvements and alternatives to the standard CFG approach.

One such improvement is the recently proposed CFG++, which addresses some of the limitations of the original CFG method. CFG++ introduces a simple modification to the CFG algorithm that results in smoother generation trajectories and improved sample quality, especially at higher guidance scales.

The importance of CFG in modern diffusion models cannot be overstated. It has become a standard component in many text-to-image generation pipelines and has been instrumental in achieving the impressive results seen in recent years. Understanding CFG and its variants is crucial for anyone working with or studying diffusion models.

In the following sections, we will delve deeper into the technical details of CFG, explore its implementation and usage in popular diffusion model libraries, examine the recent CFG++ improvement, and discuss the broader implications and future directions for guidance techniques in diffusion models.

Technical Details of Classifier-Free Guidance

To understand Classifier-Free Guidance (CFG) in depth, we need to examine its mathematical foundations and how it integrates with the diffusion process. Diffusion models work by gradually adding noise to data and then learning to reverse this process. The sampling procedure involves iteratively denoising the data, starting from pure noise and ending with a clean sample.
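As a concrete reference for the noising and denoising directions described above, here is a minimal sketch in plain Python. It assumes the common DDPM-style parameterization, in which a noisy sample is `sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps`; the text does not fix a parameterization, so this choice and the names `add_noise` and `predict_x0` are illustrative assumptions.

```python
import math
import random


def add_noise(x0, alpha_bar_t, eps):
    """Forward process in closed form: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    a = math.sqrt(alpha_bar_t)
    b = math.sqrt(1.0 - alpha_bar_t)
    return [a * x + b * e for x, e in zip(x0, eps)]


def predict_x0(x_t, alpha_bar_t, eps_hat):
    """Solve the closed form for x0 given a noise estimate (the denoised estimate)."""
    a = math.sqrt(alpha_bar_t)
    b = math.sqrt(1.0 - alpha_bar_t)
    return [(x - b * e) / a for x, e in zip(x_t, eps_hat)]


rng = random.Random(0)
x0 = [0.5, -1.0, 2.0]
eps = [rng.gauss(0.0, 1.0) for _ in x0]

x_t = add_noise(x0, alpha_bar_t=0.3, eps=eps)
# With the true noise as the estimate, the clean sample is recovered
# up to floating-point error. A trained model only approximates eps.
x0_rec = predict_x0(x_t, alpha_bar_t=0.3, eps_hat=eps)
```

In a real model the noise estimate comes from the network, and it is this estimate that guidance modifies.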

The core idea of CFG is to modify this denoising process based on conditioning information. Let's break down the key components:

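- Unconditional Diffusion Process: In the unconditional case, the prediction used by the denoising step at time t can be expressed as:

  $$\hat{\epsilon}_{\text{uncond}} = \epsilon_\theta(x_t, t)$$

  Where $x_t$ is the noisy sample at time t, and $\epsilon_\theta$ is the learned denoising function.

- Conditional Diffusion Process: When conditioning information y is available, the prediction becomes:

  $$\hat{\epsilon}_{\text{cond}} = \epsilon_\theta(x_t, t, y)$$

- CFG Interpolation: CFG combines these two processes using a guidance scale w:

  $$\tilde{\epsilon}_\theta(x_t, t, y) = \epsilon_\theta(x_t, t) + w \, \bigl(\epsilon_\theta(x_t, t, y) - \epsilon_\theta(x_t, t)\bigr)$$

This formula shows how CFG combines the unconditional and conditional processes. When w = 0, we get the unconditional process, and when w = 1, the purely conditional one. As w increases beyond 1, the result extrapolates past the conditional prediction and the conditioning information has more influence.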
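A small numeric illustration of the combination rule, written as `uncond + w * (cond - uncond)`, may help. The two-element "predictions" below are made-up numbers, not model outputs, and `cfg_combine` is an illustrative name.

```python
def cfg_combine(uncond, cond, w):
    """Return uncond + w * (cond - uncond), element-wise."""
    return [u + w * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0]  # made-up unconditional prediction
cond = [1.0, 3.0]    # made-up conditional prediction

at_zero = cfg_combine(uncond, cond, 0.0)  # equals the unconditional prediction
at_one = cfg_combine(uncond, cond, 1.0)   # equals the conditional prediction
beyond = cfg_combine(uncond, cond, 3.0)   # extrapolates past the conditional one
```

Here `beyond` is `[3.0, 7.0]`: each component has moved three times as far from the unconditional value as the conditional prediction did.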

The guidance scale w is a crucial parameter that controls the strength of the conditioning. Higher values of w lead to samples that more closely match the conditioning information but can also result in less diverse and potentially lower-quality samples.

Implementing CFG requires running the model twice at each denoising step: once with the conditioning and once without. This doubles the computational cost but allows for flexible control over the generation process.

One of the key advantages of CFG is that it requires no separate classifier and no change to the model architecture. Any diffusion model that was trained with its conditioning randomly dropped, as is common for text-to-image models, can be guided this way at inference time without further training. This has made it a popular choice for improving the controllability of existing diffusion models.

However, CFG is not without its challenges. As the guidance scale increases, the sampling process can deviate significantly from the learned diffusion trajectory, leading to artifacts and reduced sample quality. This is particularly noticeable in the early stages of the denoising process, where the influence of the conditioning information can be overly strong.

Recent research has focused on addressing these limitations. For example, the CFG++ method proposes a simple modification to the CFG algorithm that aims to keep the sampling process closer to the manifold of natural images.

To implement CFG in practice, most diffusion model libraries provide built-in support. For example, in the Hugging Face Diffusers library, CFG is typically handled automatically by the pipeline classes. Users can control the guidance scale through parameters like guidance_scale when calling the pipeline.

Here's a simplified example of how CFG might be implemented in Python:

    def classifier_free_guidance(model, x_t, t, y, guidance_scale):
        # Unconditional forward pass
        unconditional = model(x_t, t)
        # Conditional forward pass
        conditional = model(x_t, t, y)
        # Apply guidance
        return unconditional + guidance_scale * (conditional - unconditional)

This function would be called at each step of the sampling process, with the guidance_scale parameter controlling the strength of the conditioning.
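To show where that call sits in a sampling loop, the sketch below runs a deterministic DDIM-style loop on a one-dimensional toy problem. Everything here is an assumption made for illustration: `toy_model` stands in for a trained network (it is the exact noise predictor when the data is a single point), the noise level `alpha_bar_t` is passed in place of the timestep `t`, and the schedule values are arbitrary.

```python
import math

MU_UNCOND = 0.0  # toy assumption: unconditional data is a point mass at 0
MU_COND = 1.0    # toy assumption: data for condition y is a point mass at 1


def toy_model(x_t, alpha_bar_t, y=None):
    """Exact noise prediction when the data is the single point `mu`."""
    mu = MU_UNCOND if y is None else MU_COND
    return (x_t - math.sqrt(alpha_bar_t) * mu) / math.sqrt(1.0 - alpha_bar_t)


def classifier_free_guidance(model, x_t, alpha_bar_t, y, guidance_scale):
    unconditional = model(x_t, alpha_bar_t)
    conditional = model(x_t, alpha_bar_t, y)
    return unconditional + guidance_scale * (conditional - unconditional)


def sample(model, x_T, alpha_bars, y, guidance_scale):
    """Deterministic DDIM loop; alpha_bars runs from noisy to clean, ending at 1.0."""
    x = x_T
    for a_t, a_prev in zip(alpha_bars[:-1], alpha_bars[1:]):
        eps = classifier_free_guidance(model, x, a_t, y, guidance_scale)
        # Denoised estimate from the guided noise prediction
        x0_hat = (x - math.sqrt(1.0 - a_t) * eps) / math.sqrt(a_t)
        # Move to the next (less noisy) level using the same guided noise
        x = math.sqrt(a_prev) * x0_hat + math.sqrt(1.0 - a_prev) * eps
    return x


alpha_bars = [0.02, 0.1, 0.3, 0.6, 0.9, 1.0]
plain = sample(toy_model, x_T=0.7, alpha_bars=alpha_bars, y="cat", guidance_scale=1.0)
strong = sample(toy_model, x_T=0.7, alpha_bars=alpha_bars, y="cat", guidance_scale=3.0)
```

In this toy setting `plain` lands on the conditional point (about 1.0), while `strong` overshoots to about 3.0. This gives a one-dimensional picture of how large guidance scales push samples beyond what the conditional model alone would produce.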

Understanding the technical details of CFG is crucial for researchers and practitioners working with diffusion models. It provides insights into how these models can be controlled and optimized, and forms the basis for developing improved guidance techniques.

CFG++: An Improved Guidance Method

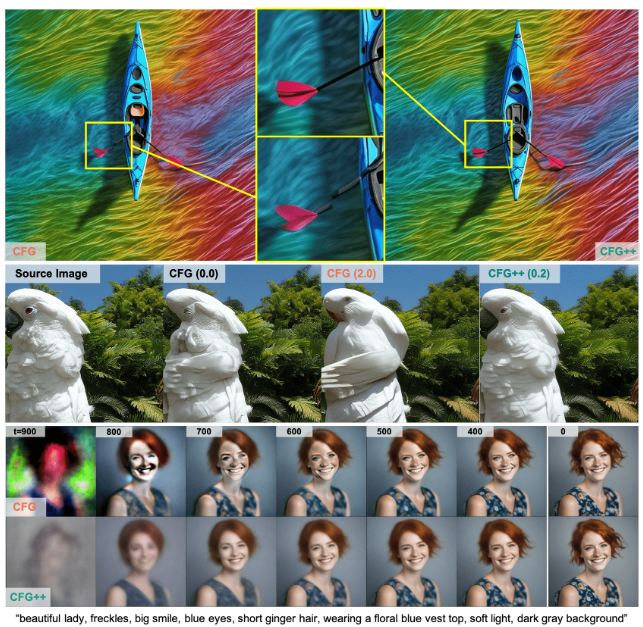

CFG++ is a recent improvement to the original Classifier-Free Guidance (CFG) method, introduced to address some of the limitations of CFG, particularly at higher guidance scales. The key insight behind CFG++ is a surprisingly simple modification to the CFG algorithm that leads to significant improvements in sample quality and generation stability.

The main difference between CFG and CFG++ lies in how the renoising process is handled after applying Tweedie's formula. In the original CFG, the guided noise estimate is used twice in each sampler step: once in Tweedie's formula to obtain the denoised estimate, and again to renoise that estimate to the next timestep. CFG++, on the other hand, keeps the guided denoised estimate but uses the unconditional noise estimate for the renoising process after applying Tweedie's formula.

This simple change results in several important improvements:

- Smoother Generation Trajectory: CFG++ leads to a smoother trajectory during the generation process. This is particularly noticeable when visualizing the intermediate steps of the diffusion process.

- Superior Sample Quality: The generated samples from CFG++ exhibit better quality compared to those produced by standard CFG, especially at higher guidance scales.

- Improved DDIM Inversion: CFG++ can be extended to significantly improve DDIM inversion, which is known to be prone to failure when using standard CFG.

The theoretical analysis of CFG++ suggests that this improvement originates from keeping the sampling process closer to the manifold of natural images. By using the unconditional noise for renoising, CFG++ reduces the deviation from the learned diffusion trajectory, which is particularly important at higher guidance scales.

To illustrate the difference between CFG and CFG++, let's consider a simplified pseudocode implementation:

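    # Shared deterministic DDIM update (illustrative helper)
    def ddim_step(x_t, eps_for_x0, eps_for_renoise, alpha_bar_t, alpha_bar_prev):
        # Tweedie's formula: denoised estimate from the noise prediction
        x0_hat = (x_t - (1 - alpha_bar_t) ** 0.5 * eps_for_x0) / alpha_bar_t ** 0.5
        # Renoise the estimate to the previous (less noisy) timestep
        return alpha_bar_prev ** 0.5 * x0_hat + (1 - alpha_bar_prev) ** 0.5 * eps_for_renoise

    # Standard CFG
    def cfg_step(model, x_t, t, y, guidance_scale, alpha_bar_t, alpha_bar_prev):
        unconditional = model(x_t, t)
        conditional = model(x_t, t, y)
        guided = unconditional + guidance_scale * (conditional - unconditional)
        # The guided noise is used both for denoising and for renoising
        return ddim_step(x_t, guided, guided, alpha_bar_t, alpha_bar_prev)

    # CFG++
    def cfg_plus_plus_step(model, x_t, t, y, guidance_scale, alpha_bar_t, alpha_bar_prev):
        unconditional = model(x_t, t)
        conditional = model(x_t, t, y)
        guided = unconditional + guidance_scale * (conditional - unconditional)
        # Key difference: use unconditional noise for renoising
        return ddim_step(x_t, guided, unconditional, alpha_bar_t, alpha_bar_prev)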

The main difference is in the final step of CFG++, where the unconditional noise is used for renoising the guided sample.
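To see how much that renoising choice changes a single step, here is a small numeric check. It assumes a deterministic DDIM-style update and uses made-up scalar values in place of real model outputs; `ddim_update` is an illustrative helper, not a library function.

```python
import math


def ddim_update(x_t, eps_for_x0, eps_for_renoise, a_t, a_prev):
    """Denoise with one noise estimate, then renoise with a (possibly different) one."""
    x0_hat = (x_t - math.sqrt(1.0 - a_t) * eps_for_x0) / math.sqrt(a_t)
    return math.sqrt(a_prev) * x0_hat + math.sqrt(1.0 - a_prev) * eps_for_renoise


# Made-up values standing in for one step of a real sampler
x_t, a_t, a_prev = 0.8, 0.5, 0.7
eps_uncond, eps_cond, scale = 0.2, 0.6, 0.5
eps_guided = eps_uncond + scale * (eps_cond - eps_uncond)

# Standard CFG: guided noise for both denoising and renoising
x_prev_cfg = ddim_update(x_t, eps_guided, eps_guided, a_t, a_prev)
# CFG++: guided noise for denoising, unconditional noise for renoising
x_prev_cfgpp = ddim_update(x_t, eps_guided, eps_uncond, a_t, a_prev)

gap = x_prev_cfg - x_prev_cfgpp
expected_gap = math.sqrt(1.0 - a_prev) * (eps_guided - eps_uncond)
```

Both variants share the same denoised estimate, so the two results differ only by `sqrt(1 - a_prev) * (eps_guided - eps_uncond)`. The gap therefore grows with the guidance scale and shrinks toward zero as the sampler approaches the clean end of the schedule.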

The benefits of CFG++ are particularly evident in tasks such as text-to-image generation and real image editing. For text-to-image generation, CFG++ allows for higher guidance scales without the degradation in sample quality often seen with standard CFG. This means that generated images can more closely match the input text prompts while maintaining overall image quality and coherence.

In the context of real image editing, CFG++ shows significant improvements in DDIM inversion. DDIM inversion is a technique used to find the latent representation of a real image in the diffusion model's latent space. This is crucial for tasks like image editing and style transfer. Standard CFG often struggles with DDIM inversion, leading to poor results when attempting to edit real images. CFG++ addresses this issue, enabling more accurate and stable image editing capabilities.
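For readers unfamiliar with DDIM inversion, the sketch below shows the basic mechanics without any guidance: the same deterministic update is run from clean to noisy to obtain a latent, then from noisy to clean to reconstruct. It is a toy under stated assumptions: `toy_eps` is the exact noise predictor for unit-variance Gaussian data and stands in for a trained network, and the cosine-shaped schedule is an arbitrary choice.

```python
import math

MU = 0.0  # toy assumption: data follows a unit-variance Gaussian centred at MU


def toy_eps(x_t, a_t):
    """Exact noise prediction for data ~ N(MU, 1); stand-in for a trained network."""
    return math.sqrt(1.0 - a_t) * (x_t - math.sqrt(a_t) * MU)


def ddim_move(x, a_from, a_to):
    """One deterministic DDIM update between two noise levels, in either direction."""
    eps = toy_eps(x, a_from)
    x0_hat = (x - math.sqrt(1.0 - a_from) * eps) / math.sqrt(a_from)
    return math.sqrt(a_to) * x0_hat + math.sqrt(1.0 - a_to) * eps


def invert_then_reconstruct(x0, num_steps):
    # alpha_bar values from clean (1.0) down to almost pure noise
    a = [math.cos(0.49 * math.pi * k / num_steps) ** 2 for k in range(num_steps + 1)]
    x = x0
    for k in range(num_steps):         # inversion: clean -> noisy
        x = ddim_move(x, a[k], a[k + 1])
    latent = x
    for k in range(num_steps, 0, -1):  # sampling: noisy -> clean
        x = ddim_move(x, a[k], a[k - 1])
    return latent, x


_, coarse = invert_then_reconstruct(1.5, num_steps=10)
_, fine = invert_then_reconstruct(1.5, num_steps=200)
```

Even in this idealized case the round trip is only approximate, and the reconstruction error shrinks as the number of steps grows. Guidance adds a further source of mismatch between the inversion and sampling passes, which is the failure mode the paragraph above refers to.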

To visualize the improvements offered by CFG++, researchers have used various comparison techniques:

- Side-by-side image comparisons: Generated images using CFG and CFG++ are placed side by side, allowing for direct visual comparison of quality and adherence to the input prompt.

- Trajectory visualizations: The intermediate steps of the diffusion process are visualized for both CFG and CFG++, showing how CFG++ maintains a smoother, more stable trajectory.

- LPIPS distance matching: To ensure fair comparisons between CFG and CFG++, researchers have used the LPIPS (Learned Perceptual Image Patch Similarity) metric to match the guidance scales between the two methods.

These visualizations and comparisons consistently demonstrate the advantages of CFG++ across various tasks and guidance scale values.

The introduction of CFG++ represents an important step forward in the development of guidance techniques for diffusion models. Its ability to maintain high sample quality at increased guidance scales opens up new possibilities for fine-grained control in generative tasks. As the field of diffusion models continues to evolve rapidly, it's likely that we'll see further refinements and alternatives to CFG++ in the near future.

Implementation and Usage in Popular Libraries

Implementing Classifier-Free Guidance (CFG) and its improved version, CFG++, in popular diffusion model libraries is crucial for making these techniques accessible to researchers and practitioners. In this section, we'll explore how CFG is implemented and used in some of the most popular libraries, with a focus on the Hugging Face Diffusers library.

The Hugging Face Diffusers library is one of the most widely used toolkits for working with diffusion models. It provides a high-level API for using pre-trained diffusion models and implements various sampling techniques, including CFG. Here's how CFG is typically used in Diffusers:

- Pipeline Abstraction: Diffusers uses a pipeline abstraction to simplify the process of generating samples. Most text-to-image pipelines in Diffusers, such as StableDiffusionPipeline, automatically incorporate CFG.

- Guidance Scale Parameter: The strength of CFG is controlled through the guidance_scale parameter when calling the pipeline. For example:

      from diffusers import StableDiffusionPipeline
      import torch

      pipeline = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)
      pipeline = pipeline.to("cuda")

      prompt = "a photograph of an astronaut riding a horse"
      # .images is a list of PIL images; take the first one
      image = pipeline(prompt, guidance_scale=7.5).images[0]

  In this example, guidance_scale=7.5 sets the strength of the classifier-free guidance.

- Scheduler Implementation: In the Stable Diffusion pipelines, the guidance combination itself is computed inside the pipeline's denoising loop. The scheduler classes manage the noise schedule and turn the guided noise prediction into the next sample.

- Custom Pipelines: For more advanced use cases, Diffusers allows users to create custom pipelines where they can have more fine-grained control over the CFG process.

While Diffusers doesn't currently have a built-in implementation of CFG++ as of the last update, the modular nature of the library means that CFG++ could be implemented in a custom pipeline, with a scheduler step modified to accept the unconditional noise prediction for renoising.

Other popular libraries and frameworks also provide support for CFG:

- PyTorch: As a low-level framework, PyTorch doesn't provide built-in CFG implementations, but it's the foundation upon which most diffusion model libraries are built. Implementing CFG in PyTorch involves manually coding the guidance process, which gives researchers full control but requires more effort.

- JAX/Flax: Similar to PyTorch, JAX and Flax are lower-level frameworks that don't provide built-in CFG implementations. However, they're popular choices for researchers due to their speed and flexibility, especially on TPUs.

- TensorFlow: While less common for diffusion models compared to PyTorch, TensorFlow can be used to implement CFG. The process would be similar to PyTorch, requiring manual implementation of the guidance mechanism.

- FastAI: FastAI, while not specifically designed for diffusion models, can be used in conjunction with PyTorch to implement CFG. It provides high-level APIs that can simplify some aspects of working with deep learning models.

Implementing CFG++ in these libraries would typically involve modifying the existing CFG implementation to use the unconditional noise for renoising after applying Tweedie's formula. This would require careful consideration of how the noise is generated and applied during the sampling process.

For researchers and developers looking to experiment with CFG++, here's a high-level outline of how it might be implemented:

- Modify the sampling loop to generate and store the unconditional noise separately.

- Implement the CFG++ algorithm, ensuring that the unconditional noise is used for renoising after the guidance step.

- Adjust any relevant hyperparameters or scheduling logic to account for the changes in the sampling process.
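The outline above can be sketched as a short sampling loop. This is a toy under stated assumptions rather than an implementation for any particular library: `toy_eps` replaces the network with the exact noise predictor for point-mass data, the loop is a deterministic DDIM-style sampler, and the guidance scale is taken from the range [0, 1], which is also an assumption of this sketch.

```python
import math


def toy_eps(x_t, a_t, mu):
    """Exact noise prediction for data concentrated at `mu` (stand-in for a network)."""
    return (x_t - math.sqrt(a_t) * mu) / math.sqrt(1.0 - a_t)


def cfg_pp_sample(x_T, alpha_bars, mu_uncond, mu_cond, scale):
    """CFG++-style loop; alpha_bars runs from noisy to clean, ending at 1.0."""
    x, trajectory = x_T, [x_T]
    for a_t, a_prev in zip(alpha_bars[:-1], alpha_bars[1:]):
        # Outline step 1: compute and keep the unconditional noise estimate
        eps_uncond = toy_eps(x, a_t, mu_uncond)
        eps_cond = toy_eps(x, a_t, mu_cond)
        eps_guided = eps_uncond + scale * (eps_cond - eps_uncond)
        # Denoised estimate from the guided noise
        x0_hat = (x - math.sqrt(1.0 - a_t) * eps_guided) / math.sqrt(a_t)
        # Outline step 2: renoise with the unconditional estimate, not the guided one
        x = math.sqrt(a_prev) * x0_hat + math.sqrt(1.0 - a_prev) * eps_uncond
        trajectory.append(x)
    return x, trajectory


alpha_bars = [0.02, 0.1, 0.3, 0.6, 0.9, 1.0]
final, traj = cfg_pp_sample(0.7, alpha_bars, mu_uncond=0.0, mu_cond=1.0, scale=0.6)
```

In this toy the final sample sits at about 0.6, between the unconditional point (0.0) and the conditional point (1.0). In a library implementation the main practical change is that the update step needs access to the unconditional prediction as well as the guided one.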

It's worth noting that while CFG++ has shown promising results, it's still a relatively new technique. As such, it may not be immediately available in all libraries and frameworks. Researchers and developers interested in using CFG++ may need to implement it themselves or wait for official support in their preferred libraries.

When implementing or using CFG and CFG++, there are several best practices and considerations to keep in mind:

- Guidance Scale Tuning: The optimal guidance scale can vary depending on the model, dataset, and specific use case. It's often necessary to experiment with different values to find the best balance between prompt adherence and sample quality.

- Computational Efficiency: Both CFG and CFG++ require two forward passes through the model at each denoising step. This doubles the computational cost compared to unconditional sampling. When implementing these techniques, it's important to consider the trade-off between improved quality and increased computation time.

- Memory Management: The additional forward pass required by CFG methods can increase memory usage. This is particularly important to consider when working with large models or on hardware with limited memory.

- Integration with Other Techniques: CFG and CFG++ often need to be integrated with other sampling techniques or model architectures. Ensuring compatibility and optimal performance when combining multiple techniques can be challenging.

- Evaluation and Comparison: When implementing new guidance techniques like CFG++, it's crucial to have robust evaluation methods in place. This might include perceptual metrics, FID scores, and human evaluation to compare the results with existing methods.
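On the efficiency and memory points above, a common pattern is to stack the unconditional and conditional inputs into one batch so the network is called once per step, trading a larger batch for fewer calls. The sketch below illustrates the idea with plain Python lists; `CountingModel` is a hypothetical stand-in for a batched network and its arithmetic is arbitrary.

```python
class CountingModel:
    """Hypothetical batched model: one output per (x, cond) pair, counting its calls."""

    def __init__(self):
        self.calls = 0

    def __call__(self, xs, conds):
        self.calls += 1
        # Arbitrary toy arithmetic standing in for a network forward pass
        return [0.1 * x + (0.5 if c is not None else 0.0) for x, c in zip(xs, conds)]


def batched_cfg(model, x_t, y, guidance_scale):
    # Duplicate the input: first entry unconditional, second entry conditional
    uncond, cond = model([x_t, x_t], [None, y])
    return uncond + guidance_scale * (cond - uncond)


model = CountingModel()
guided = batched_cfg(model, x_t=2.0, y="prompt", guidance_scale=7.5)
```

The guided value is the same as with two separate calls, but the model is invoked only once. The total compute is unchanged; the benefit is better hardware utilization, at the cost of roughly doubling activation memory for that call.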

As the field of diffusion models continues to evolve rapidly, we can expect to see further improvements and alternatives to CFG and CFG++. Libraries like Hugging Face Diffusers are likely to incorporate these advancements as they become established in the research community. Staying up-to-date with the latest developments and being prepared to implement or adapt new techniques will be crucial for researchers and practitioners working with diffusion models.

Implications and Future Directions

The development of Classifier-Free Guidance (CFG) and its improved variant CFG++ has significant implications for the field of diffusion models and generative AI as a whole. These techniques have not only enhanced the quality and controllability of generated samples but have also opened up new possibilities for creative applications and scientific research. In this section, we'll explore the broader implications of CFG and CFG++ and discuss potential future directions for guidance techniques in diffusion models.

Implications for Generative AI

Improved Control and Fidelity: CFG and CFG++ have dramatically improved our ability to control the output of diffusion models. This enhanced control allows for more precise text-to-image generation, enabling users to create images that more closely match their intended concepts. This has implications for various fields, including:

- Digital Art and Design: Artists and designers can now use text prompts to generate more accurate visual representations of their ideas, potentially streamlining the creative process.

- Marketing and Advertising: The ability to quickly generate high-quality, customized images based on textual descriptions could revolutionize content creation for marketing campaigns.

- Education and Visualization: Complex concepts can be more easily illustrated through text-guided image generation, enhancing educational materials and scientific visualizations.

Advancements in Image Editing: The improvements in DDIM inversion brought by CFG++ have significant implications for image editing tasks. This could lead to more powerful and intuitive tools for:

- Photo Manipulation: More accurate and stable editing of real images, allowing for complex modifications while maintaining overall image coherence.

- Style Transfer: Enhanced capabilities for applying artistic styles to photographs or changing the style of existing artworks.

- Content Creation: Easier generation of variations on existing images, useful for creating diverse content sets or exploring design alternatives.

Ethical Considerations: As these techniques make it easier to generate highly realistic and controllable images, they also raise important ethical questions:

- Misinformation and Deep Fakes: The ability to create convincing fake images could be misused for spreading misinformation or creating deep fakes.

- Copyright and Ownership: As AI-generated images become more prevalent, questions about copyright and ownership of these images become more complex.

- Bias and Representation: The improved control over image generation also means that biases in the training data or prompts could be more strongly reflected in the outputs.

Computational Resources: The increased computational requirements of CFG methods have implications for:

- Hardware Development: There may be increased demand for more powerful GPUs and specialized hardware to run these models efficiently.

- Cloud Computing: The need for substantial computational resources could drive further growth in cloud-based AI services.

- Energy Consumption: The energy requirements for training and running these models at scale raise concerns about environmental impact.

Future Directions

Further Refinements to Guidance Techniques: While CFG++ represents a significant improvement over standard CFG, there's likely room for further refinement. Future research might focus on:

- Adaptive Guidance: Developing methods that automatically adjust the guidance scale throughout the sampling process for optimal results.

- Multi-Modal Guidance: Extending guidance techniques to incorporate multiple types of conditioning information simultaneously (e.g., text, images, and audio).

- Efficiency Improvements: Finding ways to achieve the benefits of CFG++ with reduced computational overhead.

Integration with Other AI Techniques: The success of CFG in diffusion models suggests potential applications in other areas of AI:

- Reinforcement Learning: Adapting CFG-like techniques for guiding policy learning in RL algorithms.

- Natural Language Processing: Exploring how similar guidance methods could improve text generation or translation tasks.

- Audio Generation: Applying CFG principles to diffusion models for speech synthesis or music generation.

Theoretical Understanding: While CFG and CFG++ have shown empirical success, there's still much to understand about why they work so well. Future research might focus on:

- Mathematical Analysis: Developing a more rigorous theoretical foundation for CFG methods.

- Generalization Studies: Investigating how well CFG techniques generalize across different model architectures and problem domains.

- Optimal Scaling: Deriving principled methods for determining the optimal guidance scale for different tasks and models.

Addressing Limitations: Current CFG methods still have limitations that future research could address:

- Diversity Preservation: Developing techniques to maintain sample diversity even at high guidance scales.

- Computational Efficiency: Finding ways to achieve the benefits of CFG without the need for multiple forward passes.

- Robustness to Adversarial Prompts: Improving the stability of guided generation when faced with challenging or adversarial input prompts.

Applications in Scientific Research: The improved control offered by CFG methods could have applications beyond creative tasks:

- Drug Discovery: Guiding molecular generation processes for more targeted exploration of chemical spaces.

- Materials Science: Assisting in the design of new materials with specific properties.

- Protein Folding: Enhancing the prediction and design of protein structures.

Ethical AI and Governance: As these techniques become more powerful, there will likely be increased focus on:

- Developing Ethical Guidelines: Establishing best practices and ethical guidelines for the use of highly controllable generative models.

- Watermarking and Attribution: Creating robust methods for identifying AI-generated content and attributing it appropriately.

- Bias Mitigation: Researching ways to reduce unwanted biases in guided generation processes.

Human-AI Collaboration: The enhanced controllability offered by CFG methods opens up new possibilities for human-AI collaboration:

- Interactive Design Tools: Developing interfaces that allow users to iteratively refine generated images through natural language feedback.

- AI-Assisted Creativity: Creating tools that can serve as creative partners, offering suggestions and variations based on human input.

- Personalized Content Creation: Tailoring generative models to individual users' styles and preferences through guided fine-tuning.

Cross-Disciplinary Applications: The principles behind CFG could inspire innovations in fields beyond traditional AI:

- Quantum Computing: Exploring how guidance techniques might be applied to quantum algorithms or simulations.

- Neuroscience: Investigating parallels between CFG in AI and attention mechanisms in biological brains.

- Social Sciences: Studying how the ability to generate highly specific content might impact social dynamics and information spread.

In conclusion, the development of CFG and CFG++ represents a significant milestone in the evolution of diffusion models and generative AI. These techniques have not only improved the quality and controllability of generated content but have also opened up new avenues for research and application. As the field continues to advance, we can expect to see further refinements to guidance techniques, novel applications across various domains, and increased attention to the ethical and societal implications of these powerful generative capabilities. The future of guided diffusion models is likely to be characterized by more precise control, enhanced efficiency, and deeper integration with human creativity and scientific inquiry.